Step into a realm where every cyber exchange unfolds on a fresh page.

HashGPT isn't your typical tool; it's the whisperer behind your blog, an AI sidekick that makes understanding and interacting with a blog a breeze. This post walks through how HashGPT works and how it connects you with Hashnode blogs.

HashGPT doesn't stop at just one blog. Imagine a space where anyone can effortlessly engage with any blog, breaking down communication barriers. HashGPT also introduces a Comment Concierge that responds to comments on your blog. This means your readers can connect, inquire, and discuss, all with the help of a thoughtful AI sidekick.

Let's jump right into the user flow.

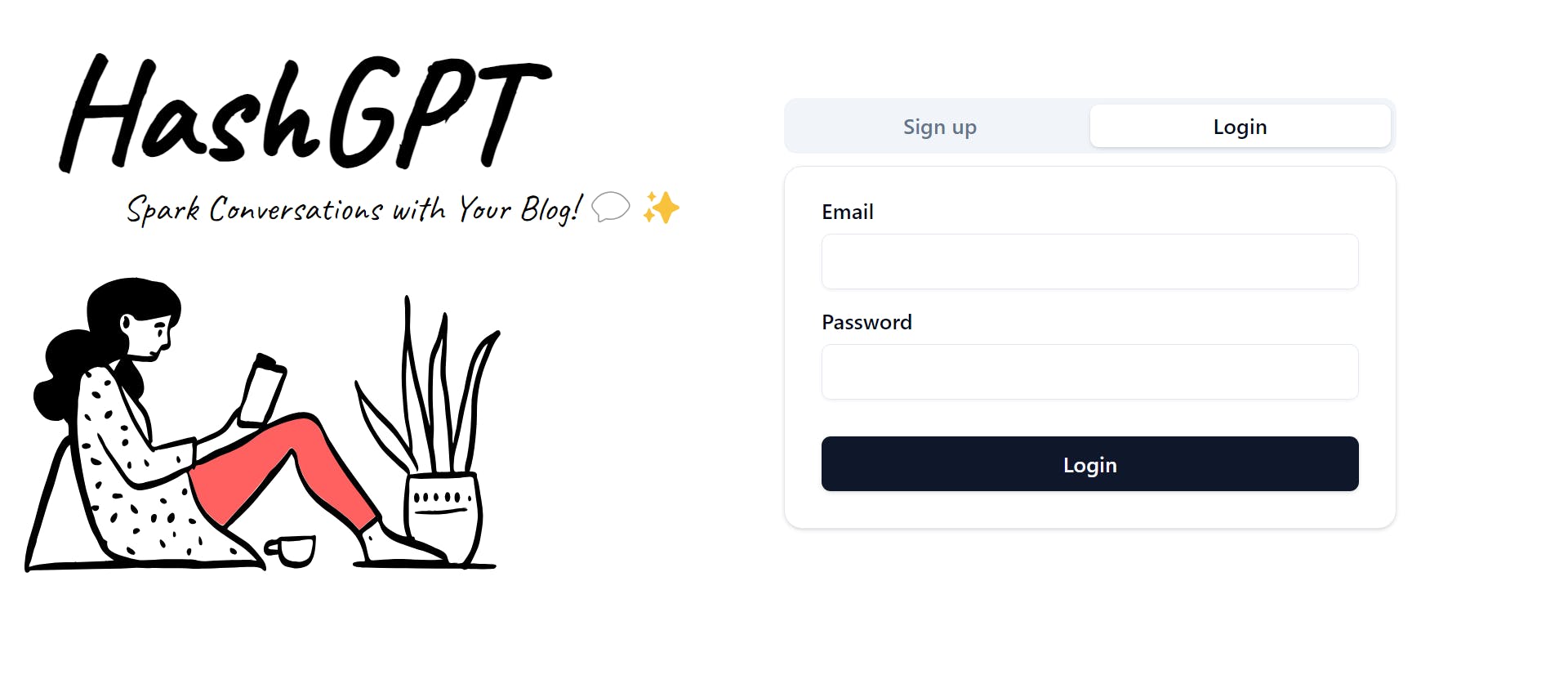

Login / Signup

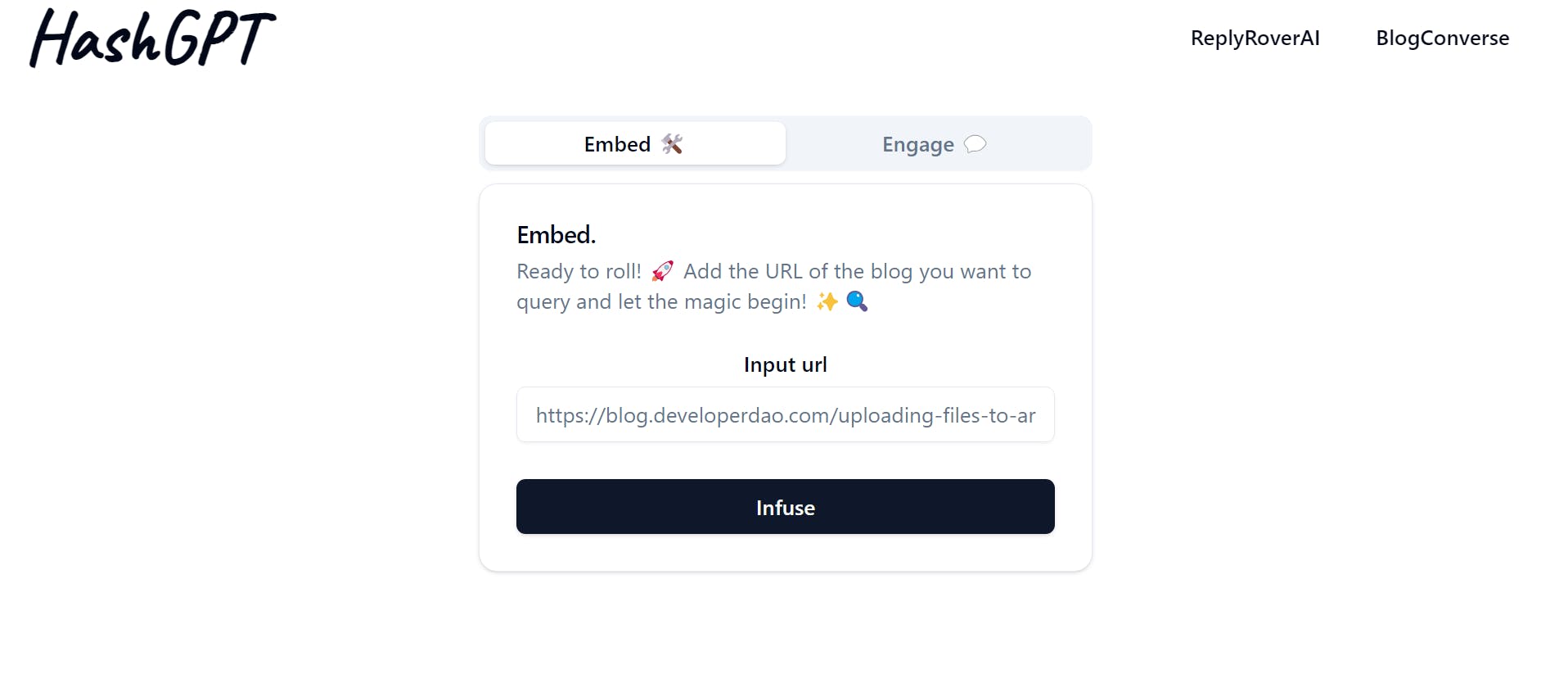

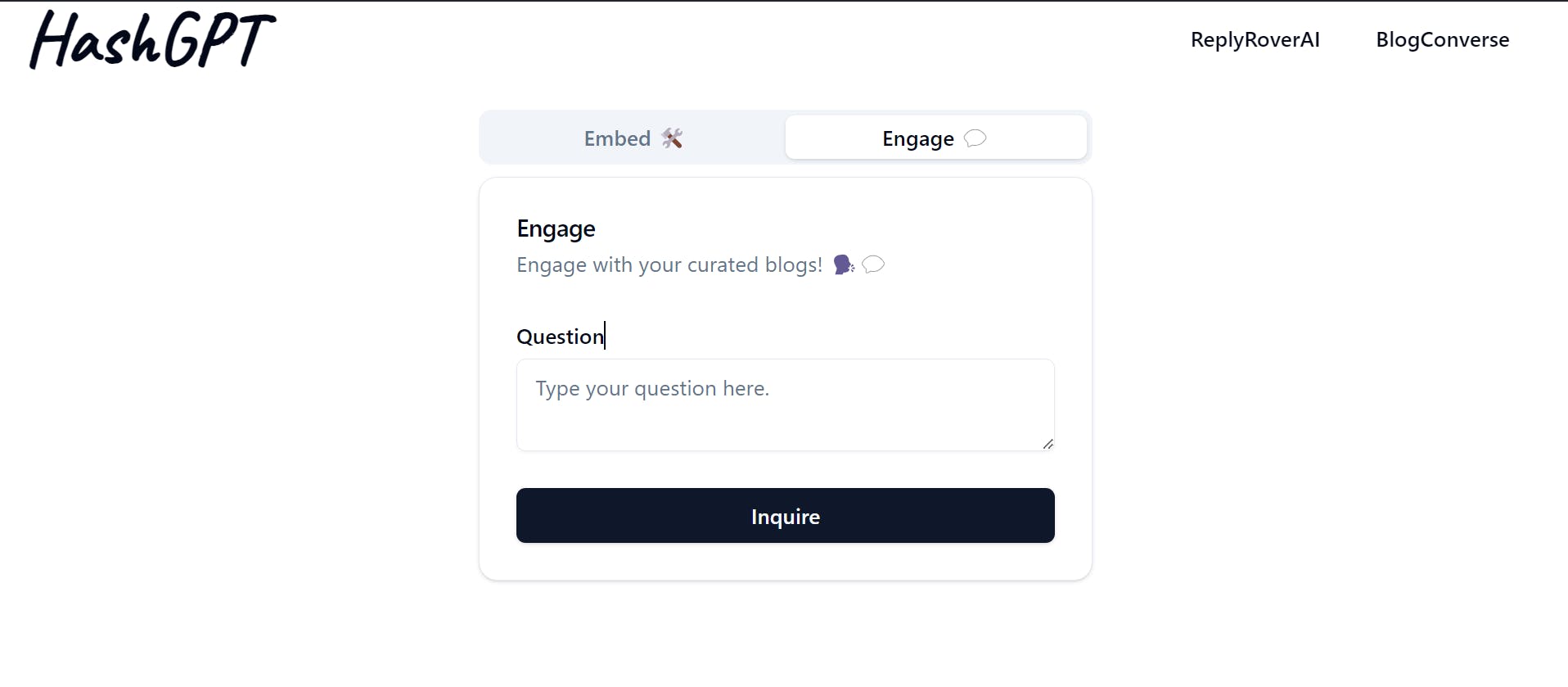

BlogConverse

Connect with any Hashnode blog effortlessly. 🌐 Your go-to space for seamless conversations and limitless interaction with diverse blogs.

Step 1: Embed

Just toss in the blog's URL and give the 'Infuse' button a tap, and voila, you're linked up with the blog.

Step 2: Engage

Fire away your questions and ignite the answers! 💥🔍 Hit the 'Inquire' button to unravel the knowledge waiting within the blog.

ReplyRover AI🚀

Introducing ReplyRover AI: your ultimate blogging sidekick on Hashnode! Picture this: whenever someone drops a question in your blog's comments, ReplyRover AI leaps into action, delivering swift and smart replies. It's you and your trusty sidekick, ReplyRover AI.

Setting up your Comment Concierge 🛠️

Just drop in your blog name and secret API key, then hit deploy. 🚀✨ Let your AI sidekick work its charm! 🤖✨

ReplyRover AI in action:

Time to plunge into the tech realm🚀✨

Tech stack used:

Next.js

MongoDB

Weaviate (vector DB)

FastAPI

How do we retrieve answers from the blog and generate responses?

Step 1: Text Preprocessing

This step breaks the blog text into individual sentences using NLTK's sentence tokenizer. It also imports the modules used in the later steps, most importantly Haystack.

```python
import nltk
from nltk.tokenize import sent_tokenize

from haystack.document_stores import WeaviateDocumentStore
from haystack.nodes import AnswerParser, BM25Retriever, EmbeddingRetriever, PromptNode, PromptTemplate
from haystack.pipelines import Pipeline

# Download the Punkt sentence tokenizer models used by sent_tokenize
nltk.download('punkt', quiet=True)


def split_into_sentences(text):
    """Split the blog text into a list of sentences."""
    sentences = sent_tokenize(text)
    return sentences
```

Step 2: Create List of Documents

A list of dictionaries representing documents is created, each containing the sentence content and its 1-based index.

```python
sentences = split_into_sentences(blog_text)  # blog_text: raw text of the blog (assumed name)
documents = [{"content": sentence, "meta": {"sentence_index": i}} for i, sentence in enumerate(sentences, 1)]
```
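To see the shape of the data these two steps produce, here is a minimal, self-contained sketch. It swaps NLTK's `sent_tokenize` for a naive regex splitter, an assumption made only so the example runs without any downloads. The sample text and the names `naive_split` and `build_documents` are hypothetical.

```python
import re


def naive_split(text):
    """Naive stand-in for sent_tokenize: split after ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def build_documents(sentences):
    """Wrap each sentence in the dict format used in Step 2, with a 1-based index."""
    return [
        {"content": sentence, "meta": {"sentence_index": i}}
        for i, sentence in enumerate(sentences, 1)
    ]


# Hypothetical sample text, not taken from a real blog
sample_text = "HashGPT reads a blog. It stores each sentence. Then it answers questions!"
documents = build_documents(naive_split(sample_text))
for doc in documents:
    print(doc)
```

A regex splitter like this will trip over abbreviations such as "e.g.", which is one reason to prefer a trained tokenizer like NLTK's for real blog text.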

Step 3: Weaviate Document Store Initialization

An instance of the Weaviate Document Store is initialized. The 'index' parameter is set to the username, so each user's documents live in their own index and can be stored and retrieved separately from everyone else's.

```python
# Weaviate Document Store initialization, with one index per user
ds = WeaviateDocumentStore(host="http://localhost", port=8080, embedding_dim=768, index=username)

# Write the sentence documents into that index
ds.write_documents(documents)
```
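One practical detail when using usernames as index names: as far as I know, Weaviate treats an index as a class, and class names are expected to start with an uppercase letter and contain only letters, digits, and underscores. Usernames will not always fit that pattern. The helper below is a hypothetical sketch (the name `username_to_index` and the "U" prefix are assumptions, not part of HashGPT) showing one way to map a username to a safe name before passing it as `index`.

```python
import re


def username_to_index(username):
    """Map an arbitrary username to a name matching ^[A-Z][_0-9A-Za-z]*$."""
    # Replace every character outside ASCII letters, digits and underscore
    cleaned = re.sub(r"[^0-9A-Za-z_]", "_", username)
    # Prefix names that are empty or do not start with a letter
    if not cleaned or not cleaned[0].isalpha():
        cleaned = "U" + cleaned
    # Upper-case only the first character and keep the rest unchanged
    return cleaned[0].upper() + cleaned[1:]


for name in ["john.doe", "42cats", "Alice"]:
    print(name, "->", username_to_index(name))
```

Note that different usernames can collapse to the same index name (for example "john.doe" and "john_doe"), so a real deployment would want a collision-free scheme such as a user ID. Haystack may also apply its own normalization to the index name on top of this.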

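Step 4: Embedding Retriever Initialization

The EmbeddingRetriever is initialized with the Weaviate Document Store and a Sentence Transformers model whose 768-dimensional output matches the embedding_dim set in Step 3. Calling update_embeddings then computes and stores an embedding for every document in the store.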

```python
retriever = EmbeddingRetriever(document_store=ds, embedding_model="sentence-transformers/multi-qa-mpnet-base-dot-v1")

# Compute and store embeddings for all documents in the store
ds.update_embeddings(retriever)
```
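Conceptually, embedding retrieval scores every stored sentence against the query vector and returns the best matches. The toy sketch below illustrates that idea with hand-written 3-dimensional vectors and a dot-product score. The vectors, sentences, and function names are made up for illustration, and the actual similarity metric depends on how the document store is configured.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def top_k(query_vec, doc_vecs, k=2):
    """Return the k (score, sentence) pairs with the highest dot-product score."""
    scored = [(dot(query_vec, vec), sentence) for sentence, vec in doc_vecs.items()]
    return sorted(scored, reverse=True)[:k]


# Toy vectors; real embeddings from the model in Step 4 have 768 dimensions
doc_vecs = {
    "HashGPT answers questions about a blog.": [0.9, 0.1, 0.0],
    "The weather was sunny yesterday.": [0.0, 0.2, 0.9],
    "Each sentence is stored with its embedding.": [0.4, 0.8, 0.1],
}
query_vec = [1.0, 0.2, 0.0]  # pretend embedding of "What does HashGPT do?"

for score, sentence in top_k(query_vec, doc_vecs):
    print(f"{score:.2f}  {sentence}")
```

In the real pipeline the vector database performs this search, so the application never loops over all documents itself.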

Step 5: Prompt Template Definition

A prompt template is defined with the prompt text and an output parser that turns the model's raw response into answer objects.

Example prompt template:

```python
prompt_template = PromptTemplate(
    prompt=""" ... """,
    output_parser=AnswerParser(),
)
```
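The actual prompt text is elided in the snippet, so the following is only a hypothetical illustration of what a grounded question-answering template can look like. It uses plain Python string formatting rather than Haystack's `PromptTemplate`, and the template wording, `TEMPLATE`, and `render_prompt` are all assumptions rather than the prompt HashGPT uses.

```python
# Hypothetical template text; the author's actual prompt is not shown in this post
TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context is not enough, say you don't know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {query}\n"
    "Answer:"
)


def render_prompt(sentences, query):
    """Join the retrieved sentences and fill them into the template."""
    context = "\n".join(f"- {s}" for s in sentences)
    return TEMPLATE.format(context=context, query=query)


print(render_prompt(
    ["HashGPT connects to a Hashnode blog.", "It answers questions about the blog."],
    "What does HashGPT do?",
))
```

Telling the model to rely only on the supplied context, and to admit when it cannot answer, is a common way to reduce made-up answers in retrieval-based setups.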

Step 6: Prompt Node Initialization

A Prompt Node is created with the GPT-3.5-turbo-instruct model, the OpenAI API key, and the prompt template defined in Step 5.

```python
prompt_node = PromptNode(
    model_name_or_path="gpt-3.5-turbo-instruct",
    api_key=openai_api_key,
    default_prompt_template=prompt_template,
)
```

Final Step: Pipeline Setup and Execution

The final step wires the retriever and the prompt node into a single pipeline. For each query, the retriever pulls the most relevant sentences from the Weaviate document store, and the prompt node passes them, together with the query, to the GPT-3.5-turbo-instruct model to generate the answer. Grounding the model in retrieved sentences helps keep its answers tied to what the blog actually says. Finally, the pipeline is run with the user's query.

```python
generative_pipeline = Pipeline()
# The retriever receives the query first
generative_pipeline.add_node(component=retriever, name="retriever", inputs=["Query"])
# The prompt node receives the retrieved documents
generative_pipeline.add_node(component=prompt_node, name="prompt_node", inputs=["retriever"])

response = generative_pipeline.run(query=query)
```
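Once the pipeline has run, the reply still has to be pulled out of the result. The sketch below assumes the Haystack 1.x result shape, where an `AnswerParser` yields a dict with an `"answers"` list whose items expose an `.answer` string. The helper name `first_answer`, the fallback message, and the `SimpleNamespace` stand-in are assumptions added so the example runs without Haystack.

```python
from types import SimpleNamespace


def first_answer(result, fallback="Sorry, I could not find an answer in this blog."):
    """Return the text of the first answer in a pipeline result, or a fallback."""
    answers = result.get("answers") or []
    if not answers:
        return fallback
    text = getattr(answers[0], "answer", "") or ""
    return text.strip() or fallback


# Stand-in for a real pipeline result, used only to make the sketch runnable
fake_result = {"answers": [SimpleNamespace(answer="  HashGPT lets you chat with a blog. ")]}
print(first_answer(fake_result))
print(first_answer({"answers": []}))
```

With a real pipeline, this would be called as `first_answer(response)`. In Haystack 1.x the number of retrieved sentences can typically be tuned per call, for example with `params={"retriever": {"top_k": 5}}` passed to `run`.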

Resources: Embedding Retrieval, Generative QA

Video demo 🎬:

github link....

Future improvements

While building, I dreamt of more; alas, time played the constraint card. Here's what I couldn't squeeze in!

An AI agent with contextual awareness of previous replies or chats, for seamless and coherent conversations

ReplyRover AI tailored to navigate and extract insights exclusively from selected documents

ReplyRover AI that can distinguish between regular comments and questions

Conclusion

Building this project meant working through plenty of challenges, and it taught me a great deal about frontend, backend, and AI. I'm excited to take it to new heights. The features implemented so far serve as a blueprint for enhancing user experience and engagement.

Finally, I express my thanks to Hashnode for the wonderful opportunity to build something with their APIs.